The extravagant tool: Kubernetes

An overview of Kubernetes

Kubernetes is one of the most talked-about names in today’s technology landscape. For anyone working in tech, it is worth understanding the what, why, and how of any widely adopted tool. This article looks at one such tool: Kubernetes.

History

Before going further, it helps to look back a little. Knowing the history of a tool makes it easier to understand why it works the way it does.

Kubernetes, also known as K8s, traces its origin to 2014. It was originally designed by Google and is now maintained by the Cloud Native Computing Foundation.

Along with the Kubernetes v1.0 release, Google partnered with the Linux Foundation to form the Cloud Native Computing Foundation (CNCF) and offered Kubernetes as a seed technology. Kubernetes builds upon a decade and a half of experience at Google running production workloads at scale using a system called Borg, combined with best-of-breed ideas and practices from the community.

On March 6, 2018, the Kubernetes project reached ninth place in commits on GitHub, and second place in authors and issues, after the Linux kernel.

What is Kubernetes?

With the history covered, let us look at what exactly the tool is.

Kubernetes is a popular open-source platform for container orchestration. In simple words, it manages applications built out of multiple, largely self-contained runtimes called containers. It automates the deployment, scaling, and management of those applications.

What are containers?

The word comes up very often alongside Kubernetes, so a quick introduction to the term is the first thing we need.

A container is a standard unit of software that packages up code and all of its dependencies so the application runs quickly and reliably from one computing environment to another. Containers solve the problem of how to run an application reliably when it is moved from one machine to another, for example from:

- Developer’s System to Test Environment.

- One Developer’s system to another Developer’s System, etc…

Why Kubernetes?

Containers have become increasingly popular since the Docker containerization project launched in 2013, but large, distributed containerized applications can become increasingly difficult to coordinate. By making containerized applications dramatically easier to manage at scale, Kubernetes has become a key part of the container revolution.

Benefits of Kubernetes

Kubernetes introduces new abstractions and concepts, and because its learning curve is steep, it is only natural to ask what the long-term payoffs of using it are.

Kubernetes (K8s) provides:

- A way to orchestrate those containers together.

- Aside: Also we need new kinds of capacity scaling …

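- Kubernetes supports scaling along two axes: complexity and capacity

- Complexity: more services in the application

- Capacity: scaling capacity usually involves replication, that is, running more copies of a pod (horizontal scaling); giving each pod more CPU or memory (vertical scaling) is secondary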

- Self-healing

- Blue-green deployments: a pattern that reduces downtime during production deployments by keeping two production environments (“blue” and “green”).
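The blue-green pattern in the last bullet can be illustrated with a small, self-contained sketch. This is not Kubernetes code: the `Environment` and `BlueGreenRouter` classes are hypothetical names invented for this example, and the health flag stands in for whatever checks a real deployment would run. The idea is only that the new version goes to the idle environment and traffic switches over if it looks healthy.

```python
from dataclasses import dataclass


@dataclass
class Environment:
    """One of the two production environments (hypothetical model)."""
    name: str
    version: str
    healthy: bool = True


@dataclass
class BlueGreenRouter:
    """Sends all traffic to exactly one environment at a time."""
    blue: Environment
    green: Environment
    live: str = "blue"  # name of the environment currently serving traffic

    def active(self) -> Environment:
        return self.blue if self.live == "blue" else self.green

    def idle(self) -> Environment:
        return self.green if self.live == "blue" else self.blue

    def deploy(self, version: str, healthy: bool = True) -> bool:
        """Install `version` on the idle environment; switch only if healthy."""
        target = self.idle()
        target.version = version
        target.healthy = healthy
        if not target.healthy:
            # The live environment was never touched, so users see no downtime.
            return False
        self.live = target.name
        return True
```

Because the previous version keeps running in the other environment, rolling back in this model is just another switch of `live`.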

✍Kubernetes manages app health, replication, load balancing, and hardware resource allocation for you.

✍Kubernetes simplifies the management of storage, secrets, and other application-related resources.

Basic Kubernetes terminology :

Kubernetes cluster :

The cluster refers to the group of machines running Kubernetes. A Kubernetes cluster must have a master (now usually called the control plane), the system that commands and controls all the other Kubernetes machines in the cluster.

Kubernetes Nodes and Pods :

Nodes are the individual machines that make up a cluster. A node may be a virtual machine or a physical machine.

Nodes run pods, the most basic Kubernetes objects that can be created or managed. Each pod represents a single instance of an application or running process in Kubernetes and consists of one or more containers. Kubernetes starts, stops, and replicates all containers in a pod as a group.
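The relationship between nodes, pods, and containers can be pictured as a small data model. The classes below are a hypothetical sketch written for this article, not the Kubernetes API. They only show that a node holds pods, a pod holds one or more containers, and the containers in a pod are started, stopped, and replicated together.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Container:
    image: str
    running: bool = False


@dataclass
class Pod:
    name: str
    containers: List[Container]

    def start(self) -> None:
        # All containers in the pod start as a group.
        for container in self.containers:
            container.running = True

    def stop(self) -> None:
        # All containers in the pod stop as a group.
        for container in self.containers:
            container.running = False

    def replicate(self, new_name: str) -> "Pod":
        # A replica gets its own copy of every container, not yet started.
        return Pod(new_name, [Container(c.image) for c in self.containers])


@dataclass
class Node:
    name: str
    pods: List[Pod] = field(default_factory=list)
```

For example, a pod with an application container and a logging container (both image names are made up) could be replicated onto the same node with `node.pods.append(pod.replicate("web-2"))`.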

Growth of Kubernetes over the years :

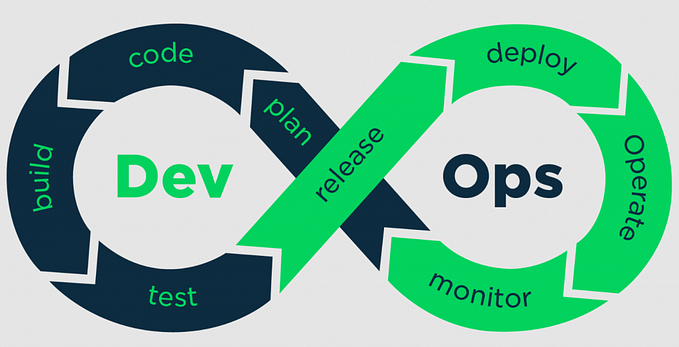

The rapid adoption of container technology, DevOps practices and principles, microservices application architectures, and the rise of Kubernetes as the de facto standard for container orchestration are the key drivers of modern digital transformation.

Tinder’s Engineering Team recently announced their move to Kubernetes to solve scale and stability challenges. Twitter is another company that has announced its own migration from Mesos to Kubernetes. The New York Times, Reddit, Airbnb, and Pinterest are just a few more examples.

Kubernetes Experiences 50% Growth in Just 6 Months

Although several cloud and infrastructure providers have started to offer their own managed Kubernetes services, including Google (GKE), Amazon (EKS), Microsoft (AKS), Red Hat (Red Hat OpenShift), and Docker (Docker Enterprise Edition), a large portion of Kubernetes users prefer to self-manage their clusters.

From the expert’s corner :

“Twitter’s switch from its initial adoption of Mesos to the use of Kubernetes Native today proves again the assertion that Kubernetes has become an industry standard for container orchestration. More importantly, Twitter’s embrace of Cloud Native is expected to provide a classic learning model for the large-scale implementation of the cloud-native technology in production,” said Zhang Lei, Senior Technical Expert on Alibaba Cloud Container Platform and Co-maintainer of Kubernetes Project.

The importance of a technology becomes clearest when we follow a real-life use case. So far, we have discussed the growing adoption of Kubernetes along with some of the companies using it. Here is one such case, that of Tinder :

Tinder using Kubernetes:

Tinder is an American geosocial networking and online dating application that allows users to anonymously swipe to like or dislike other profiles based on their photos, a small bio, and common interests.

Almost two years ago, Tinder decided to move its platform to Kubernetes. Kubernetes afforded an opportunity to drive Tinder Engineering toward containerization and low-touch operation through immutable deployment. Application build, deployment, and infrastructure would be defined as code.

Problems encountered :

Tinder’s engineers were looking to address the challenges of scale and stability. When scaling became critical, they often had to wait several minutes for new EC2 instances to come online.

Kubernetes into play :

During migration in early 2019, they reached a critical mass within the Kubernetes cluster. They solved interesting challenges to migrate 200 services and run a Kubernetes cluster at scale totaling 1,000 nodes, 15,000 pods, and 48,000 running containers.

There are more than 30 source code repositories for the microservices that are running in the Kubernetes cluster. The code in these repositories is written in different languages (e.g., Node.js, Java, Scala, Go) with multiple runtime environments for the same language.

The build system is designed to operate on a fully customizable “build context” for each microservice, which typically consists of a Dockerfile and a series of shell commands. While their contents are fully customizable, these build contexts are all written by following a standardized format. The standardization of the build contexts allows a single build system to handle all microservices.
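One way to picture a standardized build context is as a small record that every microservice fills in the same way, so that one function can plan the build for all of them. The sketch below is an assumption: the source does not describe Tinder’s actual format, so the `BuildContext` fields, the `plan_build` helper, and the example service are hypothetical. Only the `docker build -f ... -t ...` command line is standard Docker usage.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class BuildContext:
    """Hypothetical standardized build context for one microservice."""
    service: str         # name used for the resulting image
    dockerfile: str      # path to the service's Dockerfile
    commands: List[str]  # shell commands to run before the image build


def plan_build(ctx: BuildContext, tag: str) -> List[str]:
    """Return the ordered shell commands a single build system would run.

    The commands are only listed here, not executed.
    """
    steps = list(ctx.commands)
    steps.append(f"docker build -f {ctx.dockerfile} -t {ctx.service}:{tag} .")
    return steps
```

Because every service supplies the same three fields, the same `plan_build` call works whether the service is written in Node.js, Java, Scala, or Go. Only the contents of `commands` and the Dockerfile differ.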

Kubernetes Cluster Architecture And Migration

Cluster Architecture

They decided to use kube-aws for automated cluster provisioning on Amazon EC2 instances. Early on, they were running everything in one general node pool. They soon saw the need to separate workloads into different sizes and types of instances to make better use of resources. The reasoning was that running fewer heavily threaded pods together yielded more predictable performance results.

Migration

One of the preparation steps for the migration from their legacy infrastructure to Kubernetes was to change existing service-to-service communication to point to new Elastic Load Balancers (ELBs) that were created in a specific Virtual Private Cloud (VPC) subnet. This subnet was peered to the Kubernetes VPC.

Conclusion :

To sum up, the point I want to highlight is the growing adoption of Kubernetes. The containerization trend has undoubtedly driven interest in Kubernetes and the services it provides. It saves the time once spent managing servers by hand, scales systems when the need arises, and makes deployment and management more efficient and easier.