The Concept of LVM

This article covers integrating LVM (Logical Volume Management) with a Hadoop cluster. LVM manages the storage attached to a node flexibly: it pools disks so that the storage a DataNode contributes to the cluster can be sized as needed and grown later.

What is a Hadoop Cluster?

Apache Hadoop is an open-source, Java-based software framework and parallel data processing engine. It lets big data analytics tasks be broken down into smaller tasks that run in parallel, using an algorithm such as MapReduce, and distributes them across a Hadoop cluster.

A Hadoop cluster is a collection of computers, known as nodes, that are networked together to perform these kinds of parallel computations on big data sets.

Storage in Hadoop

A single NameNode tracks where data is housed in the cluster of servers, known as DataNodes. Data is stored on the DataNodes in blocks, usually 128 MB in size, and HDFS replicates each block across multiple nodes in the cluster (three copies by default).
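Because every block is replicated, the raw storage the DataNodes must provide is a multiple of the data you store. A small worked sketch, assuming a 128 MB block size and the default replication factor of 3 (hdfs_footprint is a hypothetical helper, not a Hadoop API):

```python
MiB = 1024 * 1024


def hdfs_footprint(file_size, block_size=128 * MiB, replication=3):
    """Return (blocks, raw bytes stored across DataNodes) for one file.

    Assumes the Hadoop 2+ defaults: 128 MB blocks, replication factor 3.
    The last block is not padded, so raw usage is simply size * replication.
    """
    blocks = (file_size + block_size - 1) // block_size  # ceiling division
    return blocks, file_size * replication


blocks, raw = hdfs_footprint(300 * MiB)
print(blocks, raw // MiB)  # 3 900
```

A 300 MB file therefore occupies three blocks but 900 MB of DataNode disk, which is why the storage each DataNode contributes matters.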

Is it possible to increase the storage that a DataNode contributes to the Hadoop cluster?

The answer is yes, and LVM is what makes it possible.

What is LVM?

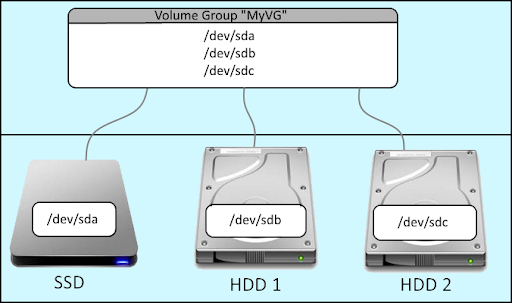

LVM is a tool for logical volume management which includes allocating disks, striping, mirroring, and resizing logical volumes. With LVM, a hard drive or set of hard drives is allocated to one or more physical volumes. LVM physical volumes can be placed on other block devices which might span two or more disks.

The physical volumes are combined into volume groups. The /boot partition is the exception: it cannot be on a logical volume because older boot loaders (such as GRUB Legacy) cannot read it. If the root (/) partition is on a logical volume, create a separate /boot partition that is not part of a volume group.

A physical volume cannot span multiple drives, so to use more than one drive, create at least one physical volume per drive.

The volume groups can be divided into logical volumes, which are assigned mount points, such as /home and /, and file system types, such as ext3 or ext4. When a "partition" reaches its full capacity, free space from the volume group can be added to its logical volume to increase the partition's size. When a new hard drive is added to the system, it can be added to the volume group, and partitions that are logical volumes can then be grown.
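The pooling and growing described above can be modelled in a few lines. This is a toy sketch, not LVM's implementation: it assumes the default 4 MiB physical extent size, ignores metadata overhead, and VolumeGroup, add_pv and grow_lv are hypothetical names:

```python
GiB = 1024**3
PE_SIZE = 4 * 1024**2  # assumption: LVM's default 4 MiB physical extent size


class VolumeGroup:
    """Toy model of an LVM volume group (ignores LVM metadata overhead)."""

    def __init__(self, name, pv_sizes):
        self.name = name
        # Each physical volume contributes only whole extents to the pool.
        self.total_extents = sum(size // PE_SIZE for size in pv_sizes)
        self.lvs = {}  # logical volume name -> allocated extents

    def free_extents(self):
        return self.total_extents - sum(self.lvs.values())

    def add_pv(self, size):
        # Models vgextend: a newly attached disk enlarges the pool.
        self.total_extents += size // PE_SIZE

    def grow_lv(self, lv, extra_bytes):
        # Creates the LV on first use, then grows it by whole extents (rounded up).
        needed = -(-extra_bytes // PE_SIZE)
        if needed > self.free_extents():
            raise ValueError(f"{self.name} has too few free extents for {lv}")
        self.lvs[lv] = self.lvs.get(lv, 0) + needed


vg = VolumeGroup("vgname", [10 * GiB, 10 * GiB])  # two 10 GiB disks
vg.grow_lv("lvname", 15 * GiB)                    # fits in the 20 GiB pool
print(vg.free_extents() * PE_SIZE // GiB)         # 5 GiB left for later growth
```

The LV is not tied to either disk: it draws extents from the shared pool, and adding a disk simply adds extents.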

Creating LVM

Now that we know the basic idea of LVM, let us see how to put it into practice.

Step 1: Create and attach external storage.

Step 2: Convert the storage into a physical volume (PV). Only physical volumes can be added to a volume group, so this conversion is required.

Step 3: Create a volume group and add the previously created PV into it.

Step 4: This volume group is the new storage. To use it, we create partitions in it, format them, and mount them.

These partitions are known as logical volumes (LVs). Unlike static partitions, you can create as many LVs as you want, limited only by the capacity of the volume group, and each LV can later be grown or shrunk. Hence the name Logical Volume Management.
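The four steps can be sketched as a dry run that only builds the command lines instead of executing them. The function name lvm_plan, the devices /dev/sdb and /dev/sdc, the size, and the mount point are placeholders (assumptions); the real commands must be run as root on the DataNode:

```python
def lvm_plan(disks, vg, lv, size, mountpoint, fs="ext4"):
    """Return steps 2-4 as argv lists; nothing is executed (dry run).

    All names passed in are placeholders chosen by the caller.
    """
    plan = [["pvcreate", disk] for disk in disks]                # step 2: disks -> PVs
    plan.append(["vgcreate", vg, *disks])                         # step 3: PVs -> one VG
    plan.append(["lvcreate", "--size", size, "--name", lv, vg])  # step 4: carve an LV
    plan.append([f"mkfs.{fs}", f"/dev/{vg}/{lv}"])               # step 4: format it
    plan.append(["mkdir", "-p", mountpoint])                      # step 4: mount point
    plan.append(["mount", f"/dev/{vg}/{lv}", mountpoint])        # step 4: mount it
    return plan


for cmd in lvm_plan(["/dev/sdb", "/dev/sdc"], "vgname", "lvname", "15G", "/datanode"):
    print(" ".join(cmd))
```

Keeping the plan as data makes it easy to review before touching real disks; each argv list could then be passed to subprocess.run(cmd, check=True).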

Integrating LVM with Hadoop!

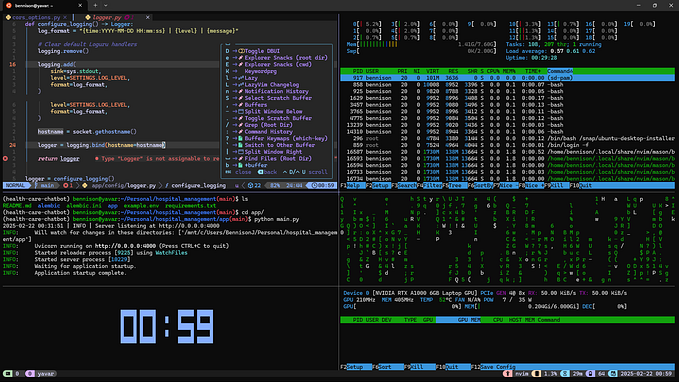

You can perform this task on AWS by attaching EBS volumes (preferably two or more) to your DataNode instance, or in a local VM by attaching extra virtual hard disks to your DataNode. LVM pools these disks, and the resulting logical volume provides the DataNode's storage to the cluster.

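Use the fdisk -l command to check that the new disks are visible. Device names vary: in a VM they typically appear as /dev/sdb, /dev/sdc, and so on, while EBS volumes often appear as /dev/xvdf or /dev/nvme1n1.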
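Picking the new disks out of fdisk -l by eye gets error-prone once several are attached. A minimal sketch, assuming the summary-line format printed by recent util-linux fdisk (for example `Disk /dev/sdb: 10 GiB, 10737418240 bytes, 20971520 sectors`); list_disks is a hypothetical helper and the sample text is illustrative:

```python
import re

# Assumed format of the per-disk summary line printed by util-linux fdisk.
DISK_LINE = re.compile(r"^Disk (/dev/\S+): .*?, (\d+) bytes")


def list_disks(fdisk_output):
    """Map device name -> size in bytes from `fdisk -l` text."""
    disks = {}
    for line in fdisk_output.splitlines():
        m = DISK_LINE.match(line)
        if m:  # lines such as "Disk model: ..." do not match
            disks[m.group(1)] = int(m.group(2))
    return disks


sample = """Disk /dev/sda: 20 GiB, 21474836480 bytes, 41943040 sectors
Disk model: VBOX HARDDISK
Disk /dev/sdb: 10 GiB, 10737418240 bytes, 20971520 sectors
Disk /dev/sdc: 10 GiB, 10737418240 bytes, 20971520 sectors
"""
print(list_disks(sample))
```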

Converting into a physical volume

Physical block devices or other disk-like devices (for example, other devices created by device-mapper, like RAID arrays) are used by LVM as the raw building material for higher levels of abstraction. Physical volumes are regular storage devices. LVM writes a header to the device to allocate it for management.

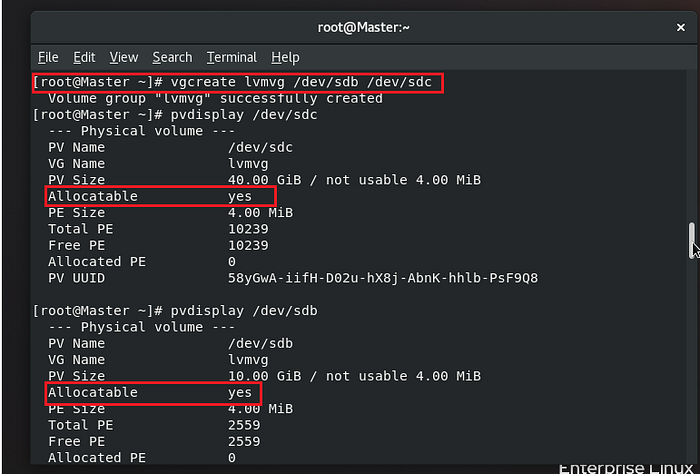

Convert the attached disks into physical volumes with pvcreate:

pvcreate /dev/sdb

pvcreate /dev/sdc

You can check the new PVs with the pvdisplay command. They are not yet allocated to any volume group, so the next step is to create one.
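The same check can be scripted. This sketch assumes the machine-friendly output of `pvs --noheadings --separator , -o pv_name,vg_name`, where a PV that belongs to no volume group has an empty second field; unallocated_pvs and the sample are illustrative:

```python
def unallocated_pvs(pvs_output):
    """Return PVs with no volume group.

    Assumes output of: pvs --noheadings --separator , -o pv_name,vg_name
    """
    free = []
    for line in pvs_output.splitlines():
        line = line.strip()
        if not line:
            continue
        pv_name, vg_name = line.split(",", 1)
        if not vg_name.strip():  # empty VG field -> not allocated yet
            free.append(pv_name.strip())
    return free


sample = "  /dev/sdb,\n  /dev/sdc,\n  /dev/sda2,centos\n"
print(unallocated_pvs(sample))
```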

Create a volume group

A volume group pools physical volumes into a single hub. Volume groups abstract the characteristics of the underlying devices and function as a unified logical device whose capacity is the combined storage of its physical volumes.

Create the volume group with vgcreate, giving the group a name followed by the PVs to include:

vgcreate vgname /dev/sdb /dev/sdc

Now pvdisplay shows that the physical volumes are allocated to the VG, and vgdisplay vgname shows the group's total and free size.

Creating partitions

Here, the partitions are known as logical volumes (LVs).

Create the LV with the following command, where <value> is the size (for example, 15G):

lvcreate --size <value> --name lvname vgname

To use the LV, we format it with mkfs and then mount it on the folder on the DataNode that will hold HDFS data.
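LVM allocates space in whole physical extents, so the LV you get can be slightly larger than the <value> you ask for. A sketch of that arithmetic, assuming the default 4 MiB extent size, power-of-1024 suffixes, and megabytes when no suffix is given; lv_size_bytes is hypothetical and covers only the common k/m/g/t suffixes:

```python
import math
import re
from fractions import Fraction

PE_SIZE = 4 * 1024**2  # assumption: default 4 MiB physical extent size
UNITS = {"k": 1024, "m": 1024**2, "g": 1024**3, "t": 1024**4}


def lv_size_bytes(value, pe_size=PE_SIZE):
    """Approximate the bytes an LV receives for a --size value like '15G'.

    Sketch: suffixes are powers of 1024 in either case, a bare number means
    megabytes, and the request is rounded up to whole physical extents.
    """
    m = re.fullmatch(r"(\d+(?:\.\d+)?)([kKmMgGtT]?)", value.strip())
    if not m:
        raise ValueError(f"unsupported size: {value!r}")
    number, unit = m.groups()
    requested = Fraction(number) * UNITS[(unit or "m").lower()]
    return math.ceil(requested / pe_size) * pe_size


print(lv_size_bytes("10m") // 1024**2)  # 12: 10 MiB rounds up to three 4 MiB extents
```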

Formatting and mounting

Format the LV with the ext4 file system:

mkfs.ext4 /dev/vgname/lvname

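Create the folder that the DataNode will use for storage and mount the LV on it:

mkdir /datanode

mount /dev/vgname/lvname /datanode

Then, in the DataNode's hdfs-site.xml, set the data directory property (dfs.datanode.data.dir, or dfs.data.dir on Hadoop 1.x) to /datanode and restart the DataNode. It now contributes the storage of the logical volume to the cluster.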
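Editing hdfs-site.xml can also be scripted. A minimal sketch with Python's standard xml.etree.ElementTree that sets the DataNode data-directory property, assuming the usual configuration/property/name/value layout; set_datanode_dir is a hypothetical helper:

```python
import xml.etree.ElementTree as ET


def set_datanode_dir(config_xml, path, key="dfs.datanode.data.dir"):
    """Set (or add) the DataNode data-directory property in hdfs-site.xml text.

    Assumes the standard <configuration><property>... layout; pass
    key="dfs.data.dir" for Hadoop 1.x.
    """
    root = ET.fromstring(config_xml)
    for prop in root.findall("property"):
        if prop.findtext("name") == key:
            value = prop.find("value")
            if value is None:
                value = ET.SubElement(prop, "value")
            value.text = path
            break
    else:  # property not present yet: append a new one
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = key
        ET.SubElement(prop, "value").text = path
    return ET.tostring(root, encoding="unicode")


print(set_datanode_dir("<configuration></configuration>", "/datanode"))
```

ElementTree's default parser drops XML comments and the declaration, so review the result before writing it back over the real file.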

With the Hadoop services running, check the storage the DataNode now reports. On Hadoop 2 and later the command is:

hdfs dfsadmin -report

(The older hadoop dfsadmin -report form is deprecated.)
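To confirm that a DataNode now reports the LV's size, the per-node Configured Capacity can be pulled out of the report. A sketch, assuming the usual layout (a cluster summary first, then one block per DataNode starting with a Name: line); datanode_capacities and the sample numbers are illustrative, not real output:

```python
import re


def datanode_capacities(report):
    """Map DataNode name -> configured capacity in bytes.

    Assumes `hdfs dfsadmin -report` layout: summary lines first, then one
    section per DataNode that begins with a 'Name:' line.
    """
    capacities = {}
    current = None  # None while still in the cluster-wide summary
    for line in report.splitlines():
        if line.startswith("Name:"):
            # "Name: host:port (hostname)" -> "host:port"
            current = line.split(":", 1)[1].strip().split(" ")[0]
        else:
            m = re.match(r"Configured Capacity: (\d+)", line)
            if m and current is not None:
                capacities[current] = int(m.group(1))
    return capacities


sample = """Configured Capacity: 16095641600 (14.99 GB)
DFS Used: 28672 (28 KB)

Live datanodes (1):

Name: 172.31.5.10:9866 (ip-172-31-5-10.ec2.internal)
Hostname: ip-172-31-5-10.ec2.internal
Configured Capacity: 16095641600 (14.99 GB)
"""
print(datanode_capacities(sample))
```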

Increasing the size of storage

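Since logical volumes are dynamic, you can increase the size of the LV backing the DataNode. Note, however, that the volume group must have enough free space for the extension. If it does not, attach another hard disk, convert it into a PV with pvcreate, and add it to the volume group with vgextend vgname <new disk>. The <value> passed to lvextend is either the new total size (for example, 20G) or, with a leading +, the amount to add (for example, +5G).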

lvextend --size <value> /dev/vgname/lvname

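The logical volume has now been resized, but the ext4 file system on it still has its old size. Grow the file system to fill the LV with resize2fs; ext4 can be grown online, so the LV can stay mounted on the /datanode folder. (Alternatively, lvextend --resizefs performs both steps in one command.)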

resize2fs /dev/vgname/lvname
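Before running lvextend, it helps to check that the volume group can cover the request. A sketch, assuming the default 4 MiB extent size and a current LV size that is already a whole number of extents; check_lvextend is hypothetical, and the free space would come from vgdisplay or vgs:

```python
GiB = 1024**3
PE_SIZE = 4 * 1024**2  # assumption: default 4 MiB physical extent size


def check_lvextend(lv_bytes, vg_free_bytes, size_bytes, relative):
    """Return the bytes lvextend --size would take from the VG, or raise.

    relative=True models '--size +N' (grow by N);
    relative=False models '--size N' (grow to a total of N).
    """
    target = lv_bytes + size_bytes if relative else size_bytes
    if target <= lv_bytes:
        raise ValueError("the new size must be larger than the current LV")
    # Round the extra space up to whole extents, as LVM does.
    extra = -(-(target - lv_bytes) // PE_SIZE) * PE_SIZE
    if extra > vg_free_bytes:
        raise ValueError("not enough free space: add a disk with pvcreate and vgextend")
    return extra


print(check_lvextend(15 * GiB, 5 * GiB, 5 * GiB, relative=True) // GiB)  # 5
```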